Microsoft DA-100 : Analyzing Data with Microsoft Power BI Exam Dumps, Organized by Martin Hoax

Latest 2023 Updated Microsoft Analyzing Data with Microsoft Power BI Syllabus

DA-100 Exam Dumps / Braindumps contains Actual Exam Questions

Practice Tests and Free VCE Software - Questions Updated on Daily Basis

Big Discount / Cheapest price & 100% Pass Guarantee

DA-100 Test Center Questions : Download 100% Free DA-100 exam Dumps (PDF and VCE)

Exam Number : DA-100

Exam Name : Analyzing Data with Microsoft Power BI

Vendor Name : Microsoft

Download DA-100 Practice Test with DA-100 VCE

It is our specialty to offer updated, valid, and current DA-100 questions that are verified to work in the actual DA-100 exam. We have placed tested Analyzing Data with Microsoft Power BI questions and answers in the download section of the website, where users can access them with one click. The DA-100 Exam Questions are updated accordingly.

To succeed in the Microsoft DA-100 exam, it is highly recommended to obtain reliable DA-100 Test Prep. It is not advisable to rely on free DA-100 Questions and Answers found on the internet, since they may be outdated, and using them risks wasting valuable time, effort, and money. To check that study materials are of high quality, visit killexams.com and download their 100% free dumps to assess the quality of their materials. If satisfied, one can proceed to register and download the full version of their DA-100 question bank. Their braindumps are described as the exact questions found in the exam, making them 100% guaranteed DA-100 Test Prep that is not available in other free resources.

It is essential to note that passing the Microsoft DA-100 exam requires a thorough understanding of the subject matter, not just the ability to memorize the answers to certain questions. Killexams.com not only provides access to reliable DA-100 Test Prep, but they also help improve one's understanding of the subject by providing guidance on tricky scenarios and questions that may arise in the exam. By taking advantage of their resources such as the DA-100 VCE exam simulator, one can practice taking the exam frequently to assess their preparedness for the actual exam. This, coupled with a solid understanding of the subject matter and reliable study materials, can greatly increase the chances of success in the Microsoft DA-100 exam.

DA-100 Exam Format | DA-100 Course Contents | DA-100 Course Outline | DA-100 Exam Syllabus | DA-100 Exam Objectives

This course will discuss the various methods and best practices that are in line with business and technical requirements for modeling, visualizing, and analyzing data with Power BI. The course will also show how to access and process data from a range of data sources including both relational and non-relational data. This course will also explore how to implement proper security standards and policies across the Power BI spectrum including datasets and groups. The course will also discuss how to manage and deploy reports and dashboards for sharing and content distribution. Finally, this course will show how to build paginated reports within the Power BI service and publish them to a workspace for inclusion within Power BI.

Skills Gained

- Ingest, clean, and transform data

- Model data for performance and scalability

- Design and create reports for data analysis

- Apply and perform advanced report analytics

- Manage and share report assets

- Create paginated reports in Power BI

Specifically:

- Understanding core data concepts.

- Knowledge of working with relational data in the cloud.

- Knowledge of working with non-relational data in the cloud.

- Knowledge of data analysis and visualization concepts.

Course outline

Module 1: Get Started with Microsoft Data Analytics

This module explores the different roles in the data space, outlines the important roles and responsibilities of a Data Analyst, and then explores the landscape of the Power BI portfolio.

Lessons

Data Analytics and Microsoft

Getting Started with Power BI

Lab : Getting Started

Getting Started

After completing this module, you will be able to:

Explore the different roles in data

Identify the tasks that are performed by a data analyst

Describe the Power BI landscape of products and services

Use the Power BI service

Module 2: Prepare Data in Power BI

This module explores identifying and retrieving data from various data sources. You will also learn the options for connectivity and data storage, and understand the difference and performance implications of connecting directly to data vs. importing it.

Lessons

Get data from various data sources

Optimize performance

Resolve data errors

Lab : Preparing Data in Power BI Desktop

Prepare Data

After completing this module, you will be able to:

Identify and retrieve data from different data sources

Understand the connection methods and their performance implications

Optimize query performance

Resolve data import errors

Module 3: Clean, Transform, and Load Data in Power BI

This module teaches you the process of profiling and understanding the condition of the data. You will learn how to identify anomalies, look at the size and shape of your data, and perform the proper data cleaning and transforming steps to prepare the data for loading into the model.

Lessons

Data shaping

Enhance the data structure

Data Profiling

Lab : Transforming and Loading Data

Loading Data

After completing this module, students will be able to:

Apply data shape transformations

Enhance the structure of the data

Profile and examine the data

Module 4: Design a Data Model in Power BI

This module teaches the fundamental concepts of designing and developing a data model for proper performance and scalability. This module will also help you understand and tackle many of the common data modeling issues, including relationships, security, and performance.

Lessons

Introduction to data modeling

Working with tables

Dimensions and Hierarchies

Lab : Data Modeling in Power BI Desktop

Create Model Relationships

Configure Tables

Review the model interface

Create Quick Measures

Lab : Advanced Data Modeling in Power BI Desktop

Configure many-to-many relationships

Enforce row-level security

After completing this module, you will be able to:

Understand the basics of data modeling

Define relationships and their cardinality

Implement Dimensions and Hierarchies

Create histograms and rankings

Module 5: Create Model Calculations using DAX in Power BI

This module introduces you to the world of DAX and its true power for enhancing a model. You will learn about aggregations and the concepts of Measures, calculated columns and tables, and Time Intelligence functions to solve calculation and data analysis problems.

Lessons

Introduction to DAX

DAX context

Advanced DAX

Lab : Introduction to DAX in Power BI Desktop

Create calculated tables

Create calculated columns

Create measures

Lab : Advanced DAX in Power BI Desktop

Use the CALCULATE() function to manipulate filter context

Use Time Intelligence functions

After completing this module, you will be able to:

Understand DAX

Use DAX for simple formulas and expressions

Create calculated tables and measures

Build simple measures

Work with Time Intelligence and Key Performance Indicators

Module 6: Optimize Model Performance

In this module you are introduced to steps, processes, concepts, and data modeling best practices necessary to optimize a data model for enterprise-level performance.

Lessons

Optimize the model for performance

Optimize DirectQuery Models

Create and manage Aggregations

After completing this module, you will be able to:

Understand the importance of variables

Enhance the data model

Optimize the storage model

Implement aggregations

Module 7: Create Reports

This module introduces you to the fundamental concepts and principles of designing and building a report, including selecting the correct visuals, designing a page layout, and applying basic but critical functionality. The important topic of designing for accessibility is also covered.

Lessons

Design a report

Enhance the report

Lab : Designing a report in Power BI

Create a live connection in Power BI Desktop

Design a report

Configure visual fields and format properties

Lab : Enhancing Power BI reports with interaction and formatting

Create and configure Sync Slicers

Create a drillthrough page

Apply conditional formatting

Create and use Bookmarks

After completing this module, you will be able to:

Design a report page layout

Select and add effective visualizations

Add basic report functionality

Add report navigation and interactions

Improve report performance

Design for accessibility

Module 8: Create Dashboards

In this module you will learn how to tell a compelling story through the use of dashboards and the different navigation tools available to provide navigation. You will be introduced to features and functionality and how to enhance dashboards for usability and insights.

Lessons

Create a Dashboard

Real-time Dashboards

Enhance a Dashboard

Lab : Designing a report in Power BI Desktop - Part 1

Create a Dashboard

Pin visuals to a Dashboard

Configure a Dashboard tile alert

Use Q&A to create a dashboard tile

After completing this module, students will be able to:

Create a Dashboard

Understand real-time Dashboards

Enhance Dashboard usability

Module 9: Create Paginated Reports in Power BI

This module will teach you about paginated reports, including what they are and how they fit into Power BI. You will then learn how to build and publish a report.

Lessons

Paginated report overview

Create Paginated reports

Lab : Creating a Paginated report

Use Power BI Report Builder

Design a multi-page report layout

Define a data source

Define a dataset

Create a report parameter

Export a report to PDF

After completing this module, you will be able to:

Explain paginated reports

Create a paginated report

Create and configure a data source and dataset

Work with charts and tables

Publish a report

Module 10: Perform Advanced Analytics

This module helps you apply additional features to enhance the report for analytical insights in the data, equipping you with the steps to use the report for actual data analysis. You will also perform advanced analytics using AI visuals on the report for even deeper and meaningful data insights.

Lessons

Advanced Analytics

Data Insights through AI visuals

Lab : Data Analysis in Power BI Desktop

Create animated scatter charts

Use the visual to forecast values

Work with Decomposition Tree visual

Work with the Key Influencers visual

After completing this module, you will be able to:

Explore statistical summary

Use the Analyze feature

Identify outliers in data

Conduct time-series analysis

Use the AI visuals

Use the Advanced Analytics custom visual

Module 11: Create and Manage Workspaces

This module will introduce you to Workspaces, including how to create and manage them. You will also learn how to share content, including reports and dashboards, and then learn how to distribute an App.

Lessons

Creating Workspaces

Sharing and Managing Assets

Lab : Publishing and Sharing Power BI Content

Map security principals to dataset roles

Share a dashboard

Publish an App

After completing this module, you will be able to:

Create and manage a workspace

Understand workspace collaboration

Monitor workspace usage and performance

Distribute an App

Module 12: Manage Datasets in Power BI

In this module you will learn the concepts of managing Power BI assets, including datasets and workspaces. You will also publish datasets to the Power BI service, then refresh and secure them.

Lessons

Parameters

Datasets

After completing this module, you will be able to:

Create and work with parameters

Manage datasets

Configure dataset refresh

Troubleshoot gateway connectivity

Module 13: Row-level security

This module teaches you the steps for implementing and configuring security in Power BI to secure Power BI assets.

Lessons

Security in Power BI

After completing this module, you will be able to:

Understand the aspects of Power BI security

Configure row-level security roles and group memberships

Killexams Review | Reputation | Testimonials | Feedback

Try out these real DA-100 Latest and updated dumps.

I passed the DA-100 exam this month with the help of killexams.com's very reliable preparation questions and answers. I didn't think that braindumps could help me achieve such high marks, but now I know that killexams.com is more than just a dump. It provides you with everything you need to pass the exam and learn everything you need to know, saving your time and energy.

Try out these DA-100 braindumps, It is remarkable!

I successfully passed the DA-100 exam with the help of killexams.com Questions and Answers material and Exam Simulator. The material helped me identify my weak areas and work on them to progress my spirit. This preparation proved to be fruitful, and I passed the exam without any trouble. I wish everyone who uses killexams.com the best of luck and hope they find the material as helpful as I did.

Can I find dumps Questions & Answers of DA-100 exam?

I am grateful to killexams.com for their mock test on DA-100, which helped me pass the exam without any issues. I have also taken a mock test from them for my other exam, and I find it very beneficial. The questions and answers provided by killexams.com are very helpful, and their explanations are incredible. I would give them four stars for their excellent service.

Worked hard on DA-100 books, but everything was in this study guide.

The exercise exam provided by killexams.com was incredible, and I passed the DA-100 exam with a perfect score. It was definitely worth the cost, and I plan to return for my next certification. I would like to express my gratitude for the prep dumps provided by killexams.com, which were extremely useful for coaching and passing the exam. I got every answer correct, thanks to the comprehensive exam preparatory materials.

Outstanding source latest outstanding updated dumps, accurate answers.

I confidently recommend killexams.com DA-100 questions answers and exam simulator to anyone preparing for their DA-100 exam. It is the most updated schooling data for the DA-100 exam and virtually covers the complete DA-100 exam. I vouch for this website as I passed my DA-100 exam last week with its help. The questions are up-to-date and accurate, so I didn't have any problem during the exam and received good marks.

Microsoft BI Actual Questions

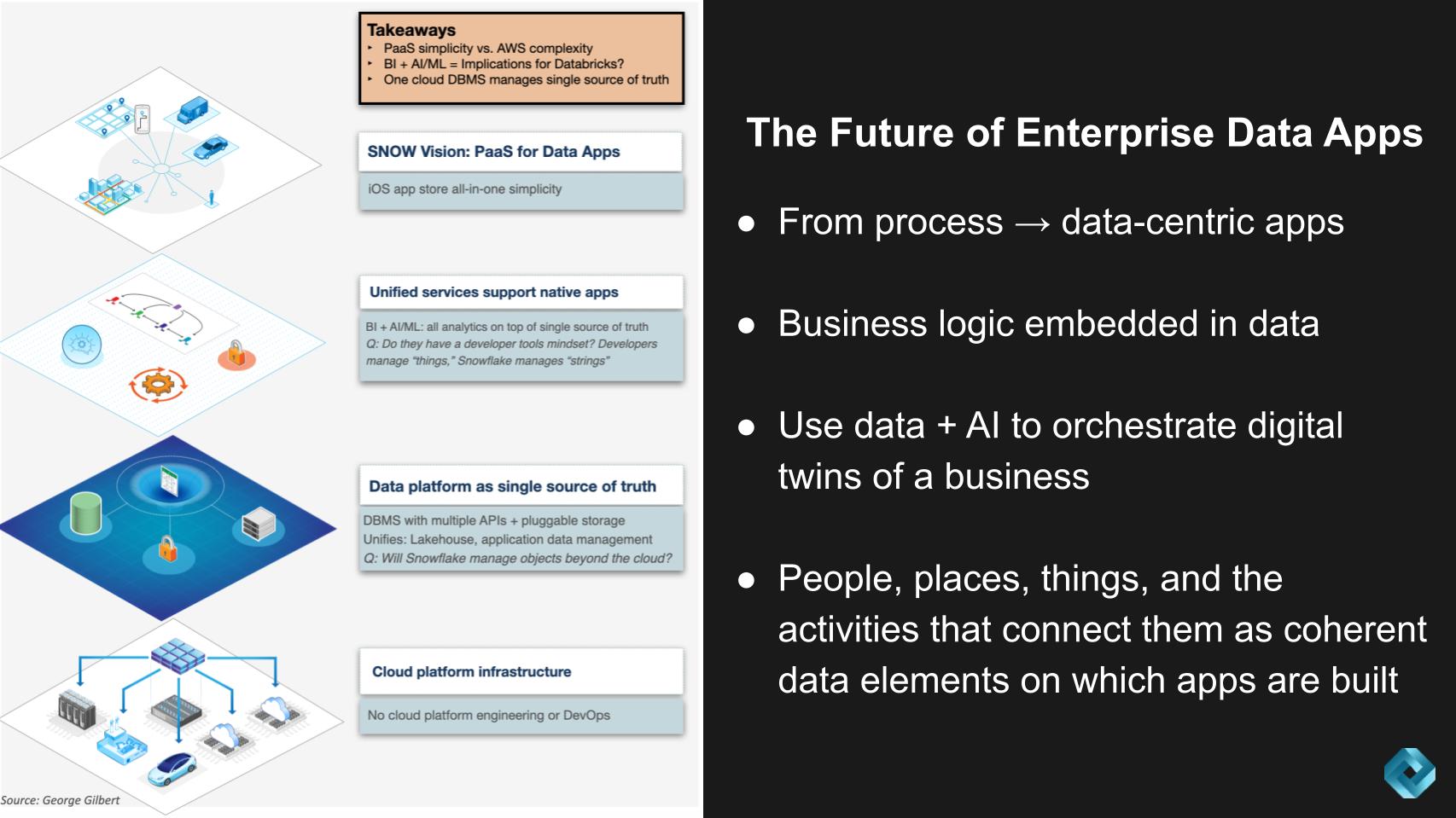

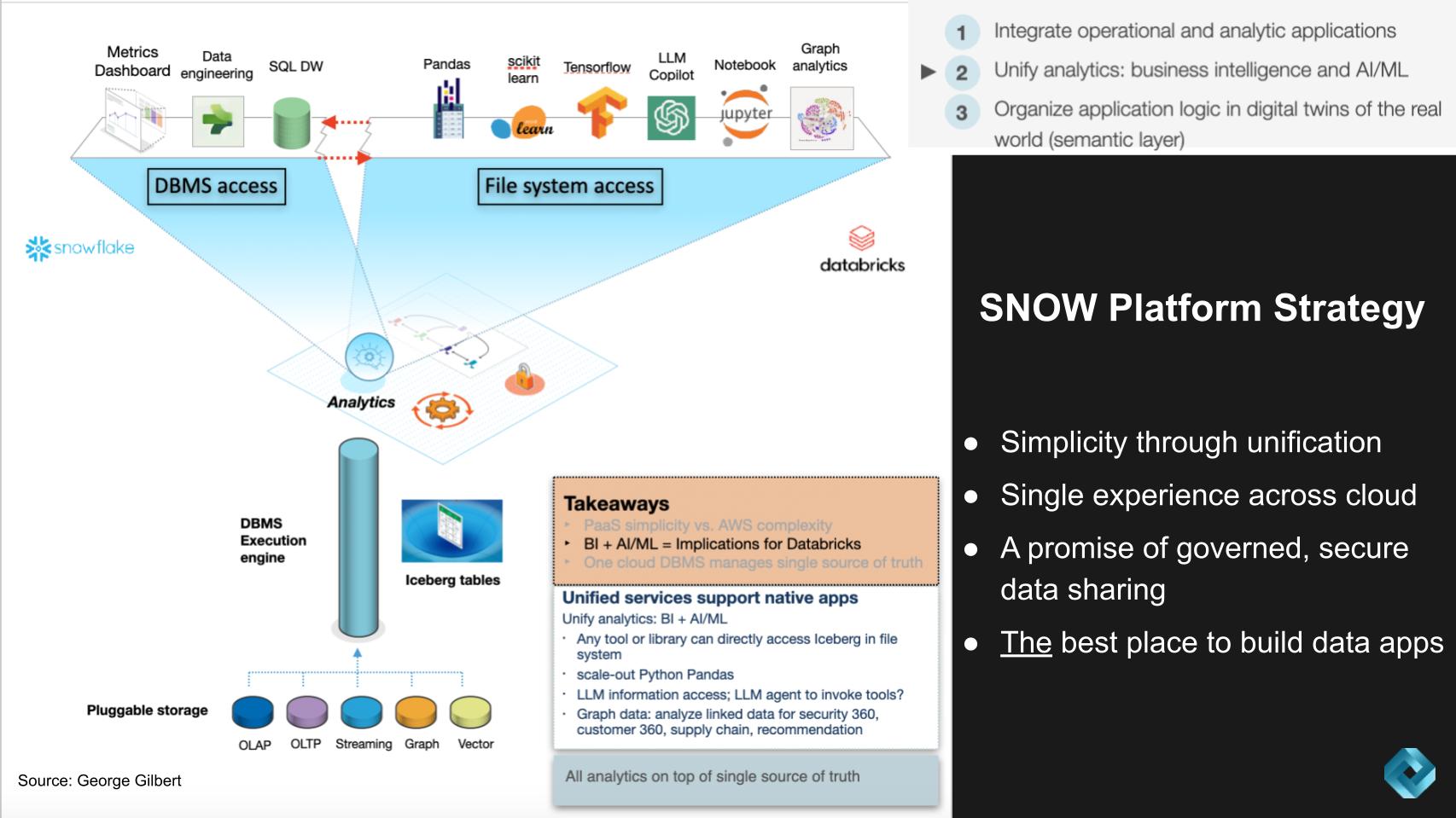

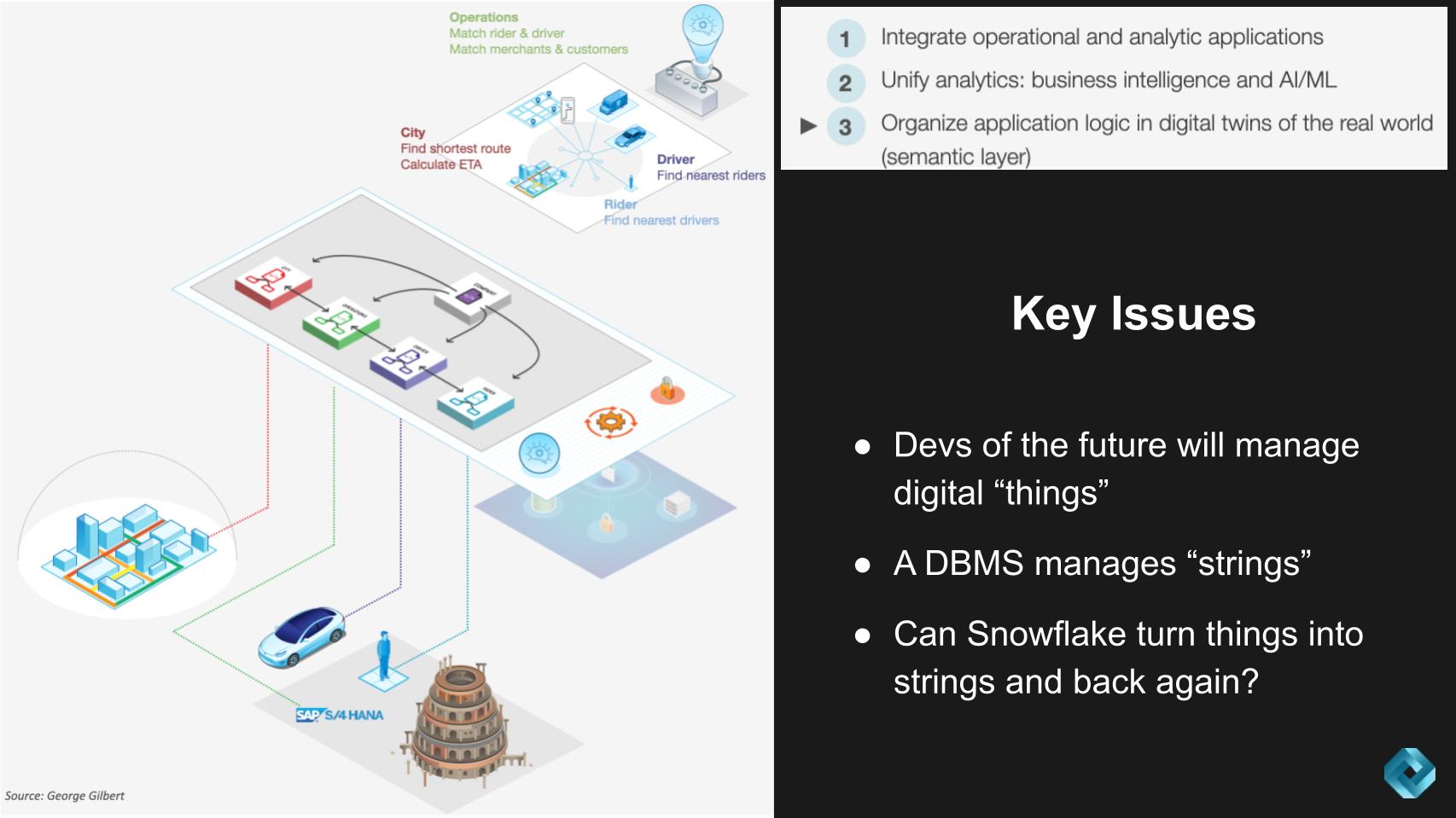

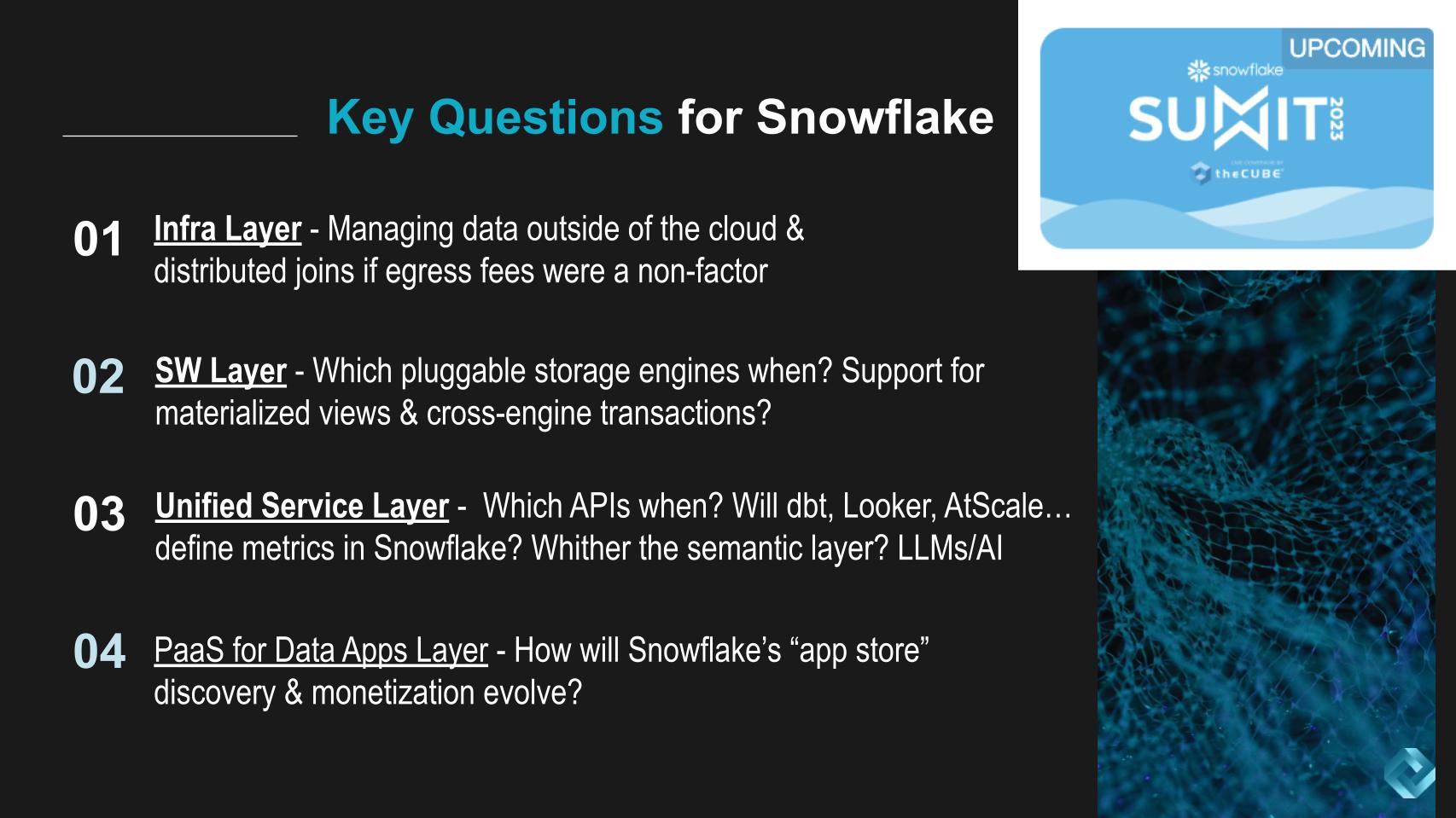

Snowflake Summit will reveal the future of data apps. Here’s our take.

Our research and analysis point to an emerging modern data stack in which apps will be built from a coherent set of data elements that can be composed at scale. Demand for these apps will come from organizations that wish to create a digital twin of their business to represent people, places, things and the activities that connect them, to drive new levels of productivity and monetization. Further, we expect Snowflake Inc., at its upcoming conference, to expose its vision to be the best platform on which to develop this new breed of data apps. In our view, Snowflake is significantly ahead of the pack but faces key decision points along the way to its future to protect this lead.

In this Breaking Analysis and ahead of Snowflake Summit later this month, we lay out a likely path for Snowflake to execute on this vision, and we address the milestones and challenges of getting there. As always, we’ll look at what the Enterprise Technology Research data tells us about the current state of the market. To do all this we welcome back George Gilbert, a contributor to theCUBE, SiliconANGLE Media’s video studio.

A picture of the new modern data stack

The graphic below describes how we see this new data stack evolving. We see the world of apps moving from one that is process-centric to one that is data-centric, where business logic is embedded into data versus today’s stovepiped model where data is locked inside application silos.

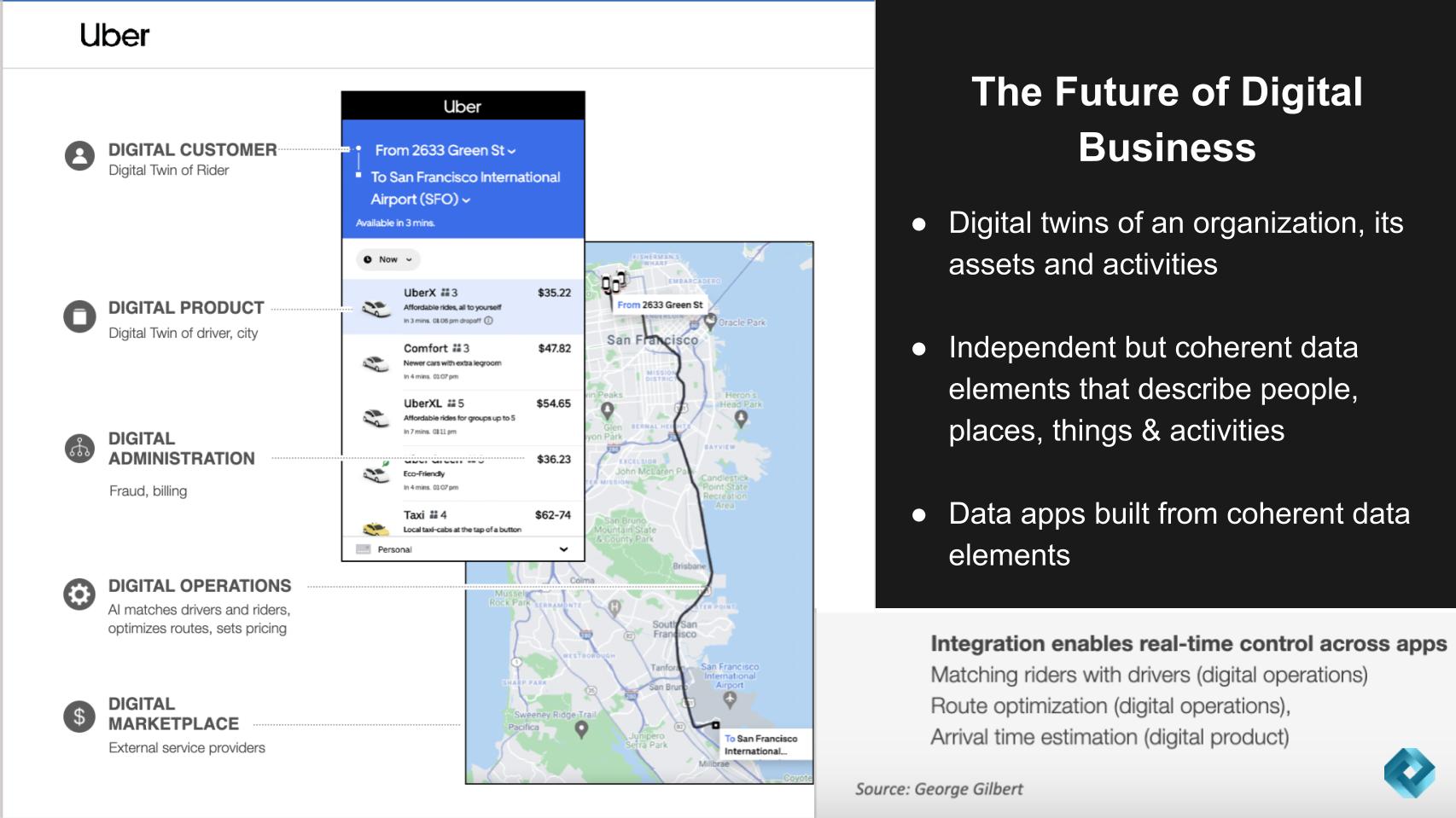

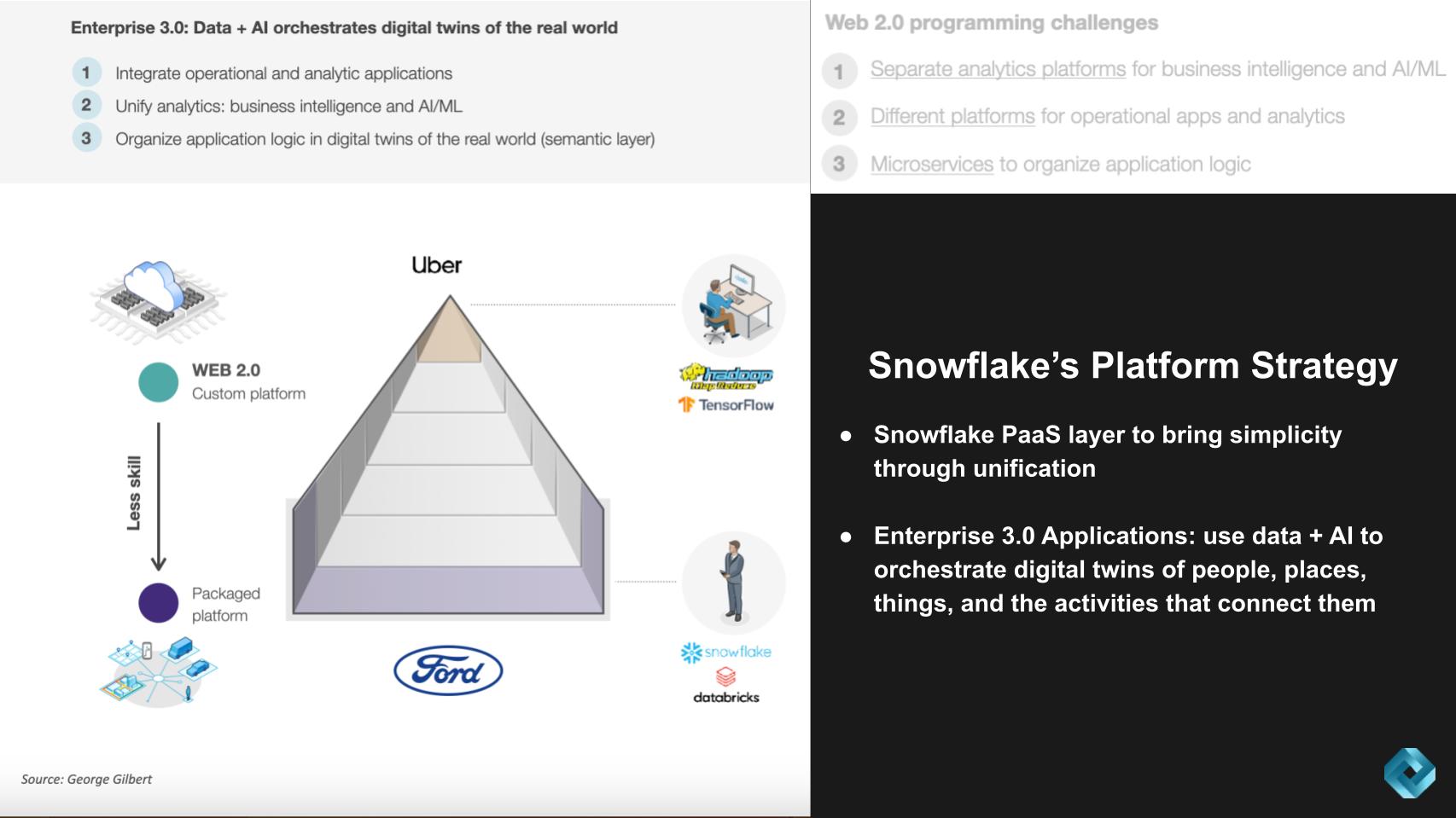

There are four layers to the emerging data stack supporting this premise. Starting at the bottom is the infrastructure layer, which we believe increasingly is being abstracted to hide underlying cloud and cross-cloud complexity, what we call supercloud. Moving up the stack is the data layer that comprises multilingual databases, multiple application programming interfaces and pluggable storage. Continuing up the stack is a unified services layer that brings together business intelligence and artificial intelligence/machine learning into a single platform. Finally there’s the platform-as-a-service for data apps at the top of the picture, which defines the overall user experience as one that is consistent and intuitive.

Here’s a summary of Gilbert’s key points regarding this emerging stack: The picture above underscores a significant shift in application development paradigms. Specifically, we’re transitioning away from an era dominated by standalone Web 2.0 apps, featuring microservices and isolated databases, toward a more integrated and unified development environment. This new approach focuses on managing “people, places and things” and describes a movement from data strings to tangible things.

This suggests a compelling trend for stakeholders to watch. The advent of a more unified and integrated development environment is a game-changing evolution. It encourages stakeholders to consider solutions like Snowflake, which simplifies and enhances the development process, thereby promoting efficiency, reducing complexity and driving new levels of monetization. The key question is: Can Snowflake execute on this vision, and can it move faster than competitors, including the hyperscalers and Databricks Inc., which we’ll discuss later in this research?

North star: Uber-like applications for all businesses

Let’s revisit the Uber analogy that we’ve shared before.

The idea described above is that the future of a digital business will manifest as a digital twin of an organization. The example we use frequently is Uber for business where, in the case of Uber Technologies Inc., drivers, riders, destinations, estimated times of arrival, and the transactions that result from real-time activities have been built by Uber into a set of applications where all these data elements are coherent and can be joined together to create value, in real time.

Here’s a summary of Gilbert’s take on why this is such a powerful metaphor: We believe the paradigm shift in application development will increasingly focus on applications being organized around real-world entities such as people, places, things and activities. This evolution reduces the gap between a developer’s conceptual thinking and the real-world business entities that need management.

We believe the vision presented by Gilbert has profound implications for those involved in application development. It’s important for stakeholders to realize that the new era of applications calls for a different kind of thinking, one that aligns with orchestrating real-world activities through a unified, coherent development environment. This has the potential to increase efficiency dramatically and enable more complex, autonomous operations.

Simplifying application development for mainstream organizations

A major barrier today is that only companies such as Uber, Google LLC, Amazon.com Inc. and Meta Platforms Inc. can build these powerful data apps. Starting 10-plus years ago, the technical teams at these companies have had to wrestle with MapReduce code and dig into TensorFlow libraries in order to build sophisticated models. Mainstream companies without thousands of world-class developers haven’t just been locked out of the data apps game, they’ve been unable to remake their businesses as platforms.

To emphasize our premise, we believe the industry generally and Snowflake specifically are moving to a world beyond today’s Web 2.0 programming paradigm where analytics and operational platforms are separate and the application logic is organized through microservices. We see a world where these types of systems are integrated and BI is unified with AI/ML, and where a semantic layer organizes application logic to enable all the data elements to be coherent. In our view, a main thrust of Snowflake’s application platform strategy will be to simplify the experience dramatically for developers while maintaining the promise of Snowflake’s data sharing and governance model.

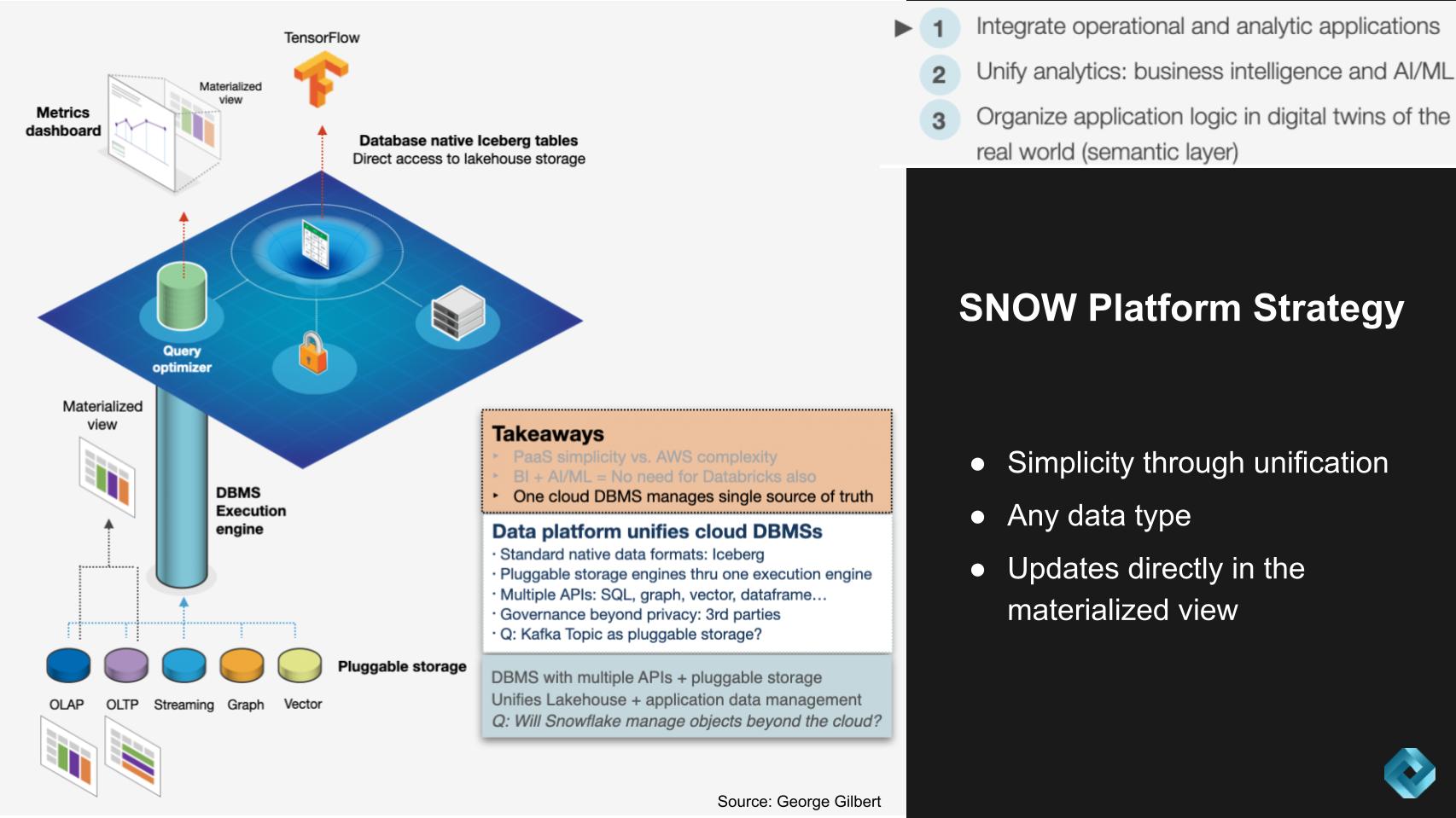

Here are the critical points from the discussion with Gilbert on this topic: Our research points to a vital trend for observers to monitor: the democratization of app development capabilities through platforms such as Snowflake and Databricks. This is fundamental in our view, and the shift has the potential to level the playing field, allowing a wider range of organizations to harness the power of sophisticated data applications.

Snowflake will continue to support more data types

Snowflake bristles at the idea that it is a data warehouse vendor. Although the firm got its foothold by disrupting traditional enterprise data warehouse markets, it has evolved into a true platform. We think a main thrust of that platform is an experience that promises consistency and governed data sharing on and across clouds. The company’s offering continues to evolve to support any data type through pluggable storage and the ability to extend this promise to materialized views, which implies a wider scope. We believe the next wave of opportunity for Snowflake (and its competitors) is building modern data apps. It’s clear to us that Snowflake wants to be the No. 1 place in the world to build these apps: the iPhone of data apps, if you will. But more specifically, Snowflake in our view wants to be the preferred platform, meaning the fastest, most cost-effective, most secure and most performant place to build and monetize data apps.

The following summarizes our view and the conversation with Gilbert on this topic: One of the core principles of this new modern data stack is supporting all data types and workloads, with Snowflake being a key player in this regard. We believe Snowflake is essentially revolutionizing the data platform, providing a simpler yet potent tool for developers to work with.

Bottom Line: Our research points to a transformative shift in data management. In the legacy era when everything was on-premises, Oracle managed the operational data while Teradata and others, including Oracle, managed separate analytic data. Snowflake aspires to manage everything from that era and more. Snowflake’s pursuit of unification and simplification offers a considerable boon for developers and organizations alike, paving the way for a future where handling diverse data types and workloads becomes commonplace. This trend is one to watch closely, as it could profoundly shape the data management landscape.

Unifying business intelligence with AI and machine learning

Unifying BI and AI/ML is a critical theme of the new modern data stack. The slide below shows Snowflake on the left-hand side, Databricks on the right, and we see these worlds coming together.

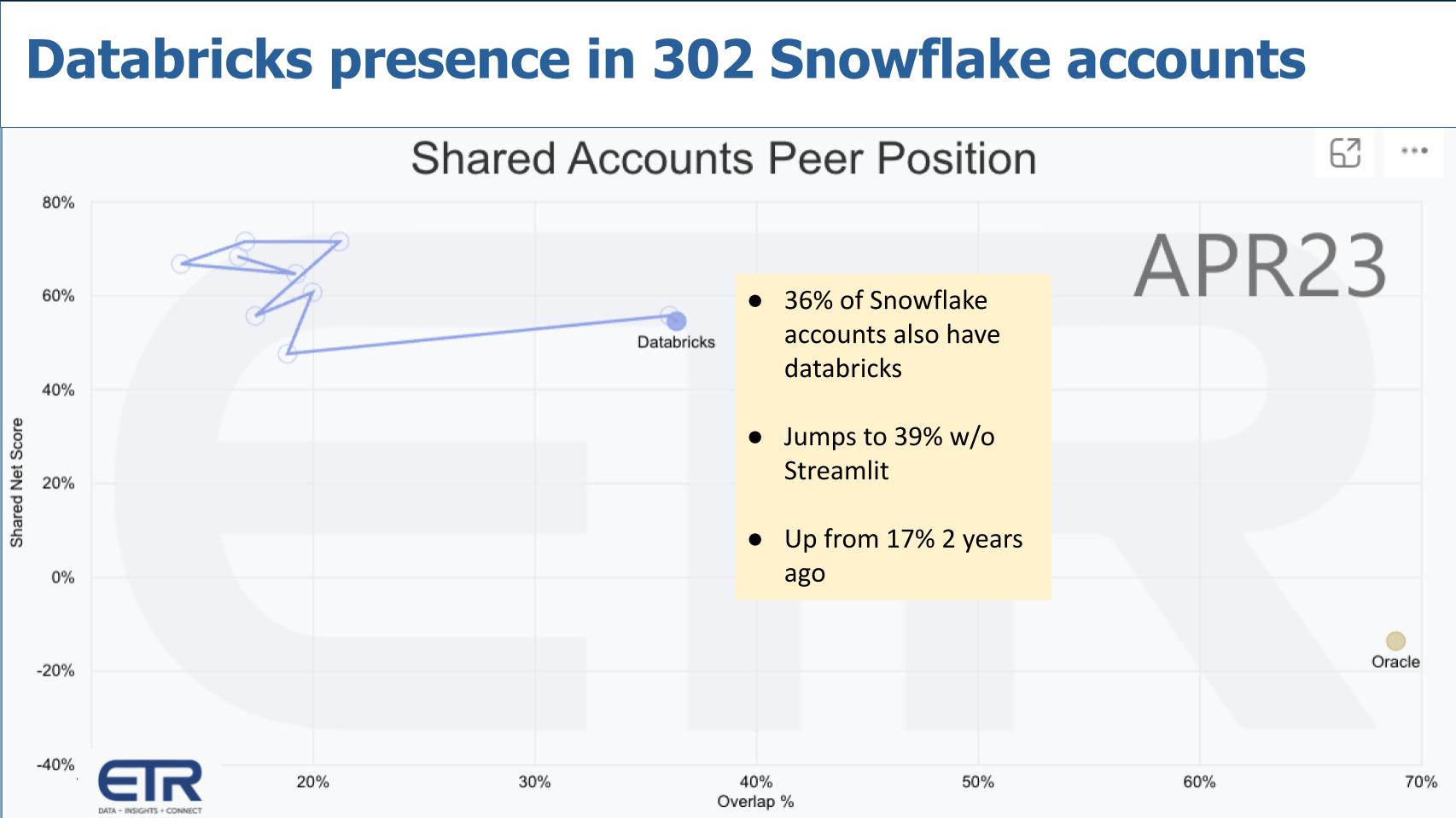

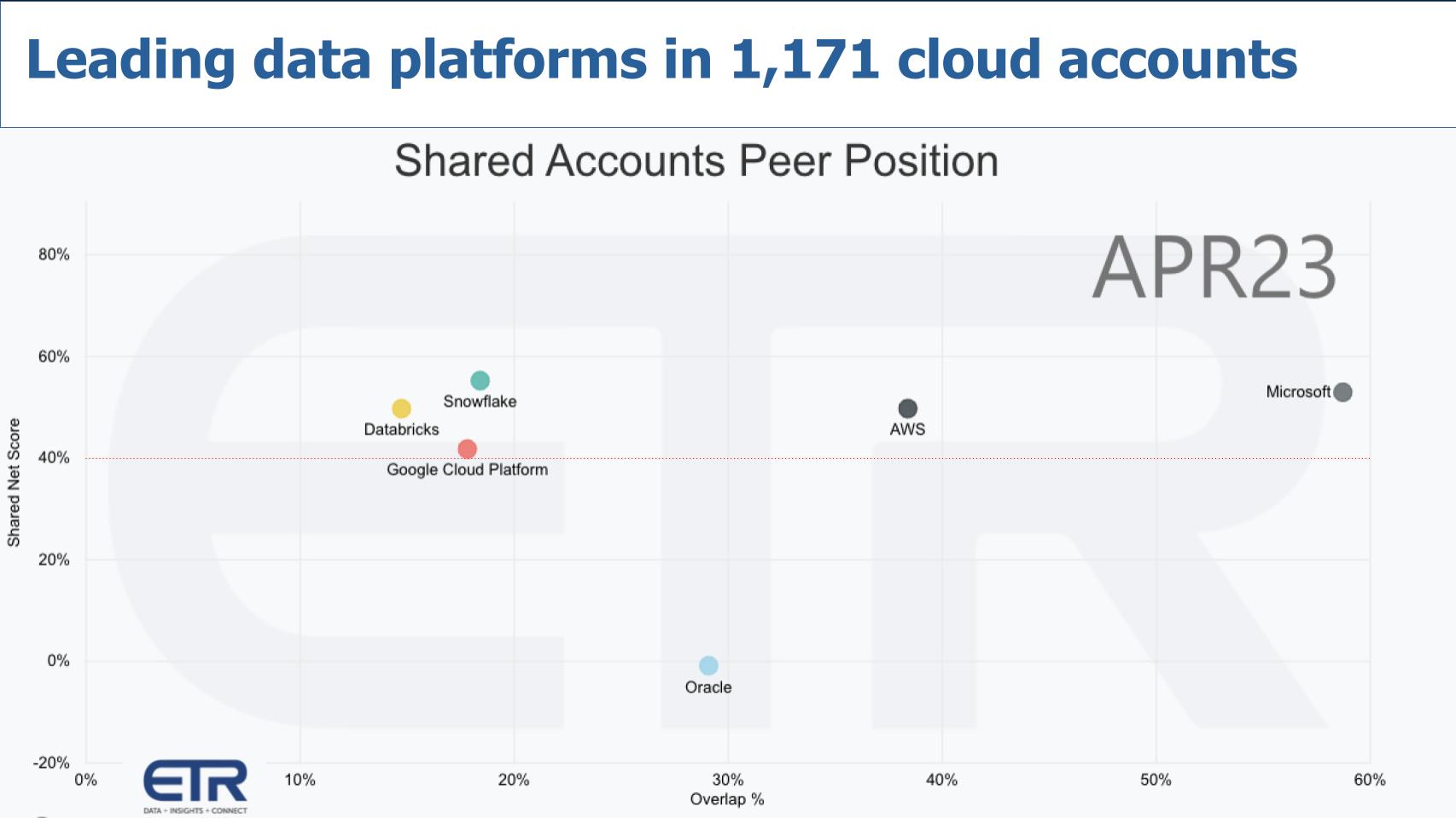

The important points of this graphic and the implications for Databricks, Snowflake and the industry in general can be summarized as follows: The dynamics between Snowflake and Databricks, two key players in the field of business intelligence and AI/ML, are evolving rapidly. Snowflake has made strides to try to eliminate the need for Databricks in some contexts, while Databricks is attacking Snowflake’s stronghold in analytics. To date, if a customer wanted the best BI and AI/ML support, they needed both Databricks and Snowflake. Each is trying to be a one-stop shop. Customers should not have to move data between platforms to alternate between BI and AI/ML.

There has been talk that it would be harder for Snowflake to build Databricks’ technology than vice versa. The assumption behind that thinking seems to be that BI is old, well-understood technology and AI/ML is newer and less well-understood. But that glosses over the immense difficulty of building a multiworkload, multimodel cloud-scale DBMS. It’s still one of the most challenging products in enterprise software. As Andy Jassy used to say, there’s no compression algorithm for experience. While others are catching up with BI workload support, Snowflake has moved on to transactional workloads and now pluggable data models. On the tools and API side, Snowflake is adding new APIs to support personas that weren’t as well-supported, such as data science and engineering.

Our research indicates that Snowflake is making significant strides toward becoming a one-stop solution that can cater to all data types and workloads. This paradigm shift has the potential to substantially alter the dynamics of the industry, making it a top-level trend to follow for analysts and business technologists. Basically you’ll be able to take your laptop-based Python and Pandas data science and data engineering code and scale it out directly to run on the Snowflake cluster with extremely high compatibility. The numbers we’ve seen are 90% to 95% compatibility. So you might have this situation where it’s more compatible to go from Python on your laptop to Python on Snowflake than to Python on Spark. That’s an example of one case where Snowflake is taking the data science tools that you used to have to go to Databricks for and supporting them natively on Snowflake.

Databricks’ presence in Snowflake accounts

We’re going to take a break from George’s excellent graphics and come back to the survey data. Let’s answer the following question: To what degree do Snowflake and Databricks customers overlap in the same accounts? This is the power of the ETR platform, where we can answer these questions over a time series.

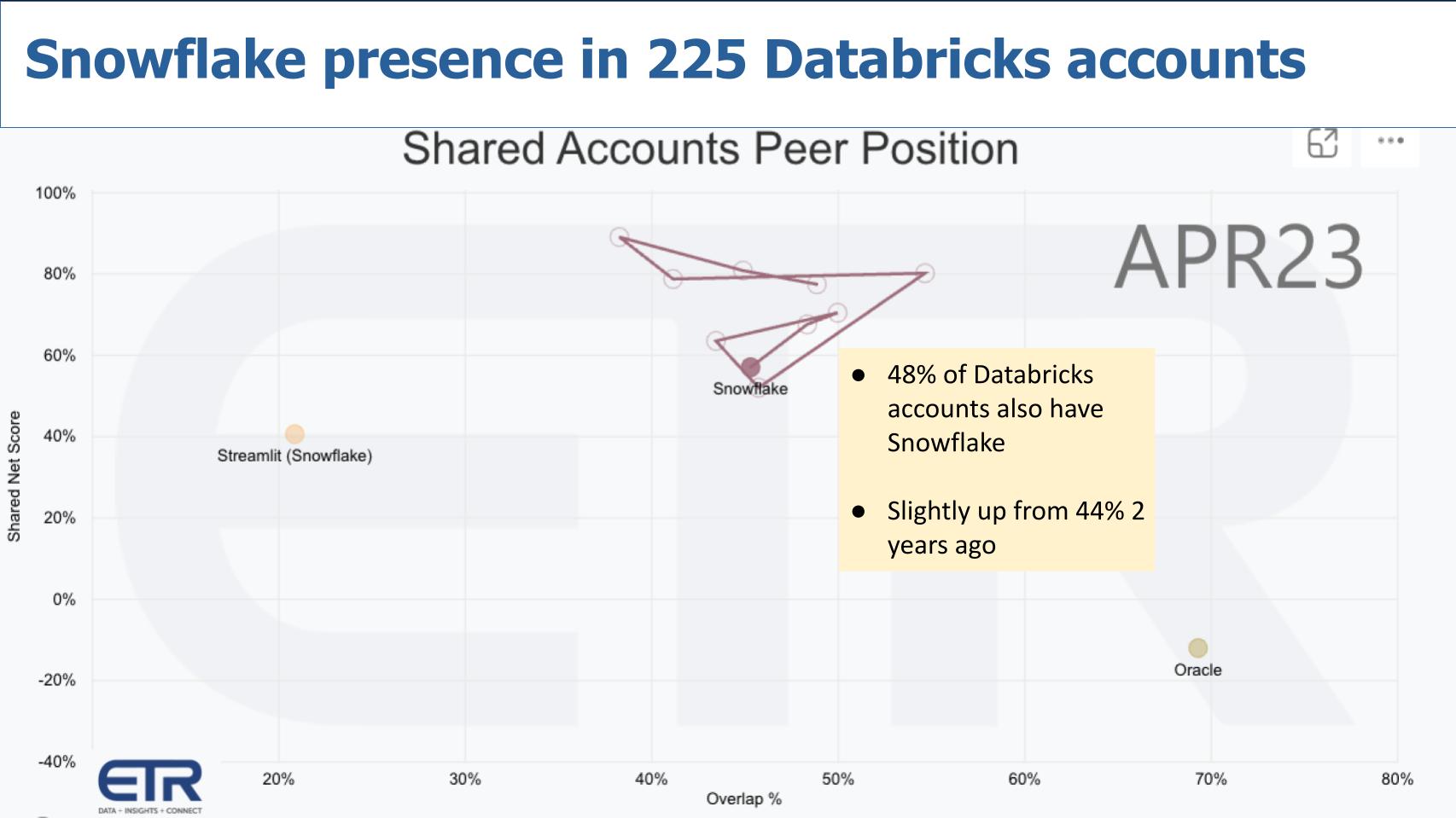

The chart above shows the presence of Databricks inside 302 Snowflake accounts within the ETR survey base. The vertical axis is Net Score or spending momentum and the horizontal axis shows the overlap. We’re plotting Databricks and we added in Oracle for context. Thirty-six percent of those Snowflake accounts are also running Databricks. That jumps to 39% if you take Streamlit out of the numbers. And notably this figure is up from 17% two years ago and 14% two years ago without Streamlit. The point is Databricks’ presence inside of Snowflake accounts has risen dramatically in the past 24 months. And that’s a warning shot to Snowflake. As an aside, Oracle is present in 69% of Snowflake accounts.

Snowflake’s presence in Databricks accounts

Now let’s flip the picture: how penetrated is Snowflake inside Databricks accounts? That is what we show below. As you can see, that number is 48%, but that’s only up slightly from 44% two years ago. So Databricks, despite the growth of Snowflake over the past two years, is more prominent in terms of penetrating Snowflake accounts.

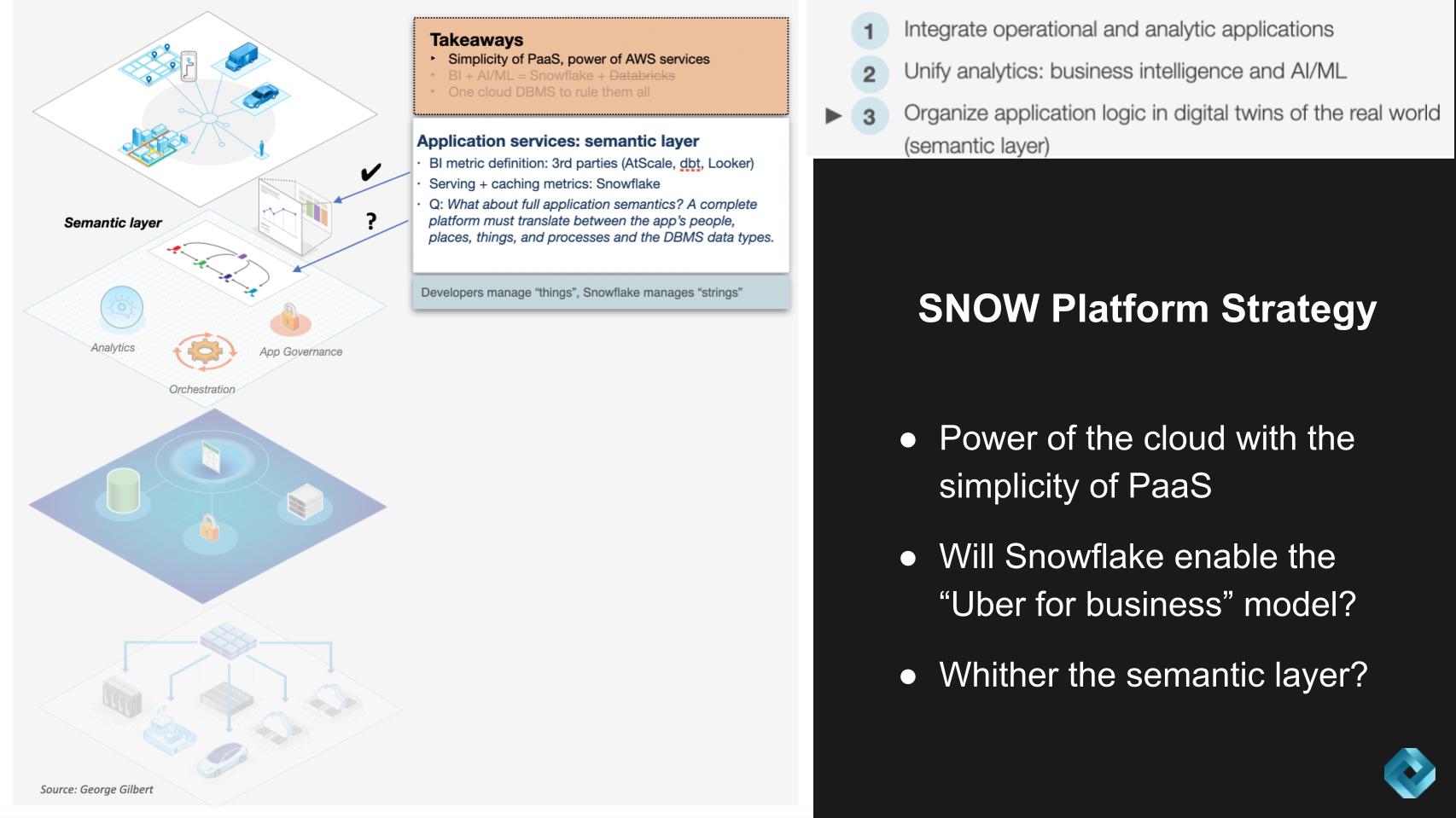
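The overlap figures quoted above can be compared with a few lines of arithmetic. The following is a minimal sketch in Python that uses only the percentages and the 302-account base stated in the text; the variable and function names are illustrative and are not ETR terminology.

```python
# Overlap figures quoted in the text (percent of accounts in the ETR survey).
# Names below are illustrative assumptions, not ETR terminology.
SNOWFLAKE_ACCOUNTS = 302  # Snowflake accounts in the survey base

overlap = {
    "Databricks in Snowflake accounts": {"two_years_ago": 17, "now": 36},
    "Snowflake in Databricks accounts": {"two_years_ago": 44, "now": 48},
}


def change_in_points(series):
    """Change in overlap, in percentage points."""
    return series["now"] - series["two_years_ago"]


def relative_growth(series):
    """Ratio of the current overlap to the overlap two years ago."""
    return series["now"] / series["two_years_ago"]


for label, series in overlap.items():
    print(f"{label}: +{change_in_points(series)} points, "
          f"{relative_growth(series):.2f}x")

# Rough count of Snowflake accounts that also run Databricks (36% of 302).
approx_shared_accounts = round(
    SNOWFLAKE_ACCOUNTS * overlap["Databricks in Snowflake accounts"]["now"] / 100
)
print(f"Approximate shared accounts: {approx_shared_accounts}")
```

Measured this way, Databricks' share of Snowflake accounts roughly doubled, while Snowflake's share of Databricks accounts moved by only a few points, which is the asymmetry the text describes.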

Here’s our summary of the overlap between these two platforms: We believe the maturity of organizations in terms of their data platform utilization is evolving rapidly. The increasing overlap between Snowflake and Databricks can be seen as a response to these companies’ realization that to extract maximum value from their data, they need to address both business intelligence and AI/ML workloads.

Our research indicates a dynamic environment where data platforms are progressively diversifying their capabilities. With Snowflake making notable progress in addressing data science and engineering workloads, organizations may need to reassess their data strategy to maximize value from these evolving platforms. Databricks is not standing still and its growth rates, based on our information, continue to exceed those of Snowflake, albeit from a smaller revenue base.

The critical semantic layer

Let’s now jump to the third key pillar, which brings us deeper into the semantic layer. The graphic below emphasizes the notion of organizing application logic into digital twins of a business. Our assertion is that this fundamentally requires a semantic layer. This is one area where our research is inconclusive with regard to Snowflake’s plans. Initially we felt that Snowflake could take an ecosystem approach and allow third parties to manage the semantic layer. However, we see this as a potential blind spot for Snowflake that could pose the risk of losing control of the full data stack. A summary of our analysis follows:

The semantic layer is starting to emerge as BI metrics. These metrics, like bookings, billings and revenue, or more specific examples like Uber’s rides per hour, were traditionally managed by BI tools. These tools had to extract data from the database to define and update these metrics, which was a challenging and resource-intensive process.

The First Step: Business Intelligence Metrics

Semantic layer implementation: Snowflake in our view intends to take on the critically demanding task of supporting these metrics. It will cache the live, aggregated data that will allow BI tool users to slice and dice by dimension. We believe it plans to support third parties, such as AtScale Inc., dbt Labs Inc. and Google-owned Looker, to define the metrics and dimensions. Previously, such tools typically had to cache data extracts outside the DBMSs themselves. This approach fits with Snowflake’s business model of supporting an ecosystem of tools. In essence, we believe that if Snowflake’s approach to handling the semantic layer within its platform is to leave that to third parties, it might be too narrow and potentially misses the broader implications and challenges of application semantics.

Roadmap: Why Snowflake might vertically integrate the semantic layer

Let’s double-click on this notion of the semantic layer and its importance. Further, we want to explore what it means for Snowflake in terms of who owns the semantic layer and how to translate the language of people, places and things into the language of databases.
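To make the idea of metrics and dimensions concrete, here is a minimal sketch in Python of a metric defined once and then sliced by dimension. It is purely illustrative: the ride records, the `METRICS` registry and the `slice_metric` helper are hypothetical names invented for this example, and nothing here reflects how Snowflake, AtScale, dbt or Looker actually implement a semantic layer.

```python
from collections import defaultdict

# Hypothetical ride records: each row is an activity with two dimensions
# (city, hour) and one measure (fare). The data is invented for illustration.
RIDES = [
    {"city": "Boston", "hour": 9, "fare": 18.0},
    {"city": "Boston", "hour": 9, "fare": 22.0},
    {"city": "Boston", "hour": 10, "fare": 15.0},
    {"city": "Austin", "hour": 9, "fare": 12.0},
]

# A metric is a named aggregation defined once, independent of any BI tool.
METRICS = {
    "rides": lambda rows: len(rows),
    "revenue": lambda rows: sum(r["fare"] for r in rows),
}


def slice_metric(metric_name, dimensions, rows=RIDES):
    """Group rows by the requested dimensions and apply the metric per group."""
    groups = defaultdict(list)
    for row in rows:
        key = tuple(row[d] for d in dimensions)
        groups[key].append(row)
    metric = METRICS[metric_name]
    return {key: metric(group) for key, group in groups.items()}


# "Rides per hour" sliced by city and hour, and revenue sliced by city.
rides_per_hour = slice_metric("rides", ["city", "hour"])
revenue_by_city = slice_metric("revenue", ["city"])
print(rides_per_hour)
print(revenue_by_city)
```

The point of the sketch is the separation of concerns: the metric definition lives in one place, and any consumer can request it along whichever dimensions it needs instead of re-deriving it from raw extracts.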

In essence, we believe that if Snowflake’s approach to handling the semantic layer within its platform is to leave it to third parties, it may lose control of the application platform and its destiny. Snowflake aspires to build a platform for applications that handles all data and workloads. In the 1990s, Oracle wanted developers to code application logic in its tools and in the DBMS stored procedures. But Oracle lost control of the application stack as SAP, PeopleSoft and then the Java community around BEA all built a new layer for application logic. That’s the risk if Snowflake doesn’t get this layer right.

The leading data platforms all want a piece of the action

Let’s examine the horses on the track in this race. The Belmont Stakes is this weekend. It’s a grueling, mile-and-a-half race; it’s not a sprint. Below we take a look at the marathon runners in the world of cloud data platforms.

The graphic above uses the same dimensions as earlier, Net Score or spending momentum on the Y axis and the N overlap within a filter of 1,171 cloud accounts in the ETR data set. That red line at 40% indicates a highly elevated Net Score. Microsoft just announced Fabric. By virtue of its size and simple business model (for customers), it is furthest up and to the right in spending metrics and market presence. Its lead is not necessarily in function, but the model works. AWS is “gluing” together its various successful data platforms. Google has a killer product in BigQuery, with perhaps the best AI chops in the business, but is behind in both momentum and market presence. Databricks and Snowflake both have strong spending momentum, notwithstanding that Snowflake’s Net Score has been in decline since the January 2022 survey peak. However, both Snowflake’s and Databricks’ Net Scores are highly elevated.

Here’s our overall analysis of the industry direction: The big change is we believe the market will increasingly demand unification and simplification. It starts with unifying the data, so that your analytic data is in one place. So first, there’s one source of truth for analytic data. Then we’ll add to that one source of truth all your operational data. Then build one uniform engine for accessing all that data, and then a unified application stack that maps people, places, things and activities to that one source of truth.

Here’s our examination of the leading players: The overall theme of our analysis suggests that these major providers are working toward consolidating and streamlining their data architectures to facilitate a single source of truth, including both analytic and operational data, making it easier to build and manage data apps. However, each of these platforms has its unique set of challenges in achieving this goal.

Key questions to watch at Snowflake Summit 2023

Let’s close with the key issues we’ll be exploring at the Snowflake Summit and Databricks events, which take place the same week in late June. We’re going to start at the bottom layer of the stack in the chart below and work our way up.

Before we get into the stack, one related area we’re exploring is Snowflake’s strategy of managing data outside the cloud. It’s unclear how Snowflake plans to accommodate this data. We’ve seen some examples of partnerships with Dell Technologies Inc., but at physical distances there are questions about its capacity to handle tasks like distributed joins. We wonder how it would respond if data egress fees were not a factor.

Moving to the stack: We expect to get more clues and possibly direct data from Snowflake (and Databricks) later this month. As well, we continue to research the evolution of cloud computing. We’re reminded of the Unix days, where the burden of assembling services fell on the developer. We see Snowflake’s approach as an effort to simplify this by offering a more integrated and coherent development stack. Lastly, Snowflake plays in a highly competitive landscape where companies such as Amazon, Databricks, Google and Microsoft constantly add new features to their platforms. Nonetheless, we believe Snowflake continues to be ahead and has positioned itself as a company that can utilize the robust infrastructure of the cloud (primarily AWS) but simultaneously simplify the development of data apps. On balance, this will require a developer tools mindset and force Snowflake to move beyond its database comfort zone, a nontrivial agenda that could reap massive rewards for the company and its customers.

Keep in touch

Many thanks to George Gilbert for his collaboration on this research. Thanks to Alex Myerson and Ken Shifman on production, podcasts and media workflows for Breaking Analysis. Special thanks to Kristen Martin and Cheryl Knight, who help us keep our community informed and get the word out, and to Rob Hof, our editor in chief at SiliconANGLE. Remember we publish each week on Wikibon and SiliconANGLE. These episodes are all available as podcasts wherever you listen.
Email david.vellante@siliconangle.com, DM @dvellante on Twitter and comment on our LinkedIn posts. Also, check out this ETR Tutorial we created, which explains the spending methodology in more detail. Note: ETR is a separate company from Wikibon and SiliconANGLE. If you would like to cite or republish any of the company’s data, or inquire about its services, please contact ETR at legal@etr.ai.

All statements made regarding companies or securities are strictly beliefs, points of view and opinions held by SiliconANGLE Media, Enterprise Technology Research, other guests on theCUBE and guest writers. Such statements are not recommendations by these individuals to buy, sell or hold any security. The content presented does not constitute investment advice and should not be used as the basis for any investment decision. You and only you are responsible for your investment decisions. Disclosure: Many of the companies cited in Breaking Analysis are sponsors of theCUBE and/or clients of Wikibon. None of these firms or other companies have any editorial control over or advanced viewing of what’s published in Breaking Analysis. Image: Dennis/Adobe Stock

The Convergence of Chat GPT, No-Code and Citizen Development

In recent years, the technology landscape has been revolutionized by three powerful concepts: no-code platforms, citizen development, and conversational AI like Chat GPT. According to Gartner, by the year 2024, 60% of app development will be done with rapid application development tools, i.e., no-code and low-code platforms. As these technologies evolve and converge, they empower individuals to create innovative solutions and drive digital transformation. This article dives into the significance of this convergence, exploring use cases that illustrate the potential for creativity and productivity when these technologies are combined.

1. Democratizing application development

The emergence of no-code platforms and citizen development has radically transformed the software development landscape. These innovations empower non-technical users to create their own software applications, thus democratizing the application development process. By harnessing the power of no-code tools and Chat GPT’s AI capabilities, businesses can enable their workforce to tackle challenges, streamline processes, and improve efficiency.

The Role of Chat GPT’s AI Capabilities

By integrating Chat GPT’s AI capabilities with no-code platforms, businesses can create intelligent and automated solutions for various tasks. This not only streamlines processes but also improves efficiency across the organization. Chat GPT’s AI can also assist employees, offering relevant and accurate responses based on natural language understanding. This enables companies to improve their employees’ overall experience and satisfaction.
Use Case: Self-Service Employee Portal for HR Management

An HR manager with no coding background wants to create a self-service employee portal that allows employees to access important information and handle everyday tasks without constant HR intervention. The HR manager can use a no-code platform to build the self-service employee portal, selecting and customizing features such as payroll information, leave requests, and benefits enrollment. The visual interface and drag-and-drop functionality allow the HR manager to create an intuitive and user-friendly application. By integrating Chat GPT AI into the employee portal, the HR manager can create a virtual assistant that answers common employee queries and guides them through various processes. This reduces the workload for the HR department and provides employees with immediate and personalized assistance.

2. Enhancing Customer Engagement

In the ever-evolving digital landscape, businesses constantly seek innovative ways to enhance customer engagement and ensure their offerings remain relevant to their target audience. A powerful synergy of no-code platforms, citizen development, and Chat GPT can enable companies to create unique and interactive customer experiences that not only meet but anticipate the needs of their users. This section explores how this combination can be used to build a no-code chatbot for a marketing team, showcasing the advantages of integrating these technologies into a company’s customer engagement strategy.

Integrating Chat GPT for Real-Time Customer Engagement

Chat GPT, a state-of-the-art natural language processing model, offers powerful capabilities for understanding and generating human-like responses to text inputs. By integrating Chat GPT into a no-code chatbot, companies can provide users with real-time, personalized interactions that mimic human conversation. This enhanced level of engagement enriches the user experience and leads to increased customer satisfaction and loyalty.
Creating a No-Code Chatbot: A Marketing Team Use Case

To illustrate the potential of combining no-code platforms, citizen development, and Chat GPT, let’s consider a marketing team that wants to create a chatbot for its website. The team can leverage a no-code platform to design and deploy the chatbot, customizing the interface to align with its brand identity and desired user experience. Next, by integrating Chat GPT’s natural language processing capabilities, the chatbot can understand and engage with visitors in real time, answering questions, providing product information, and offering personalized recommendations. The combination of these technologies allows the marketing team to develop a chatbot that provides immediate support to website visitors and gathers valuable insights into customer preferences and behavior. This information can be used to refine marketing strategies, improve product offerings, and tailor future customer interactions.

3. Streamlining internal communication and collaboration

These converging technologies can facilitate better internal communication, collaboration, and knowledge sharing within an organization. By integrating no-code tools with Chat GPT, employees can efficiently access information and share insights.

Integrating No-Code Tools with Chat GPT for Enhanced Collaboration

By integrating no-code tools with Chat GPT, employees can create custom solutions that help streamline internal communication and collaboration. This can be achieved through the following steps: Imagine a team working on a complex project with multiple stakeholders, deadlines, and deliverables. Managing such projects can be challenging, with team members often needing help to access critical information or share insights in a timely manner. To address these challenges, the team can create a no-code project management tool that integrates Chat GPT.
This solution would provide the following benefits: The convergence of no-code platforms, citizen development, and Chat GPT empowers individuals to experiment with new ideas and innovate rapidly. Businesses can harness this creative potential to solve complex problems and stay competitive.

Harnessing the Power of Convergence for Rapid Innovation

A startup looking to build a new product can greatly benefit from this convergence of no-code platforms, citizen development, and Chat GPT. The startup can begin by building a no-code prototype of its product using a suitable no-code platform. This allows the team to quickly create a functional product version without spending time and resources on traditional software development processes. The result is a faster time-to-market and reduced development costs.

Incorporating Chat GPT for User Interactions

Once the prototype is built, the team can integrate Chat GPT to simulate user interactions and automate specific tasks. For example, Chat GPT can generate content, answer user queries, or guide users through the application’s features. This provides a more interactive and engaging user experience. With the no-code prototype and Chat GPT integration in place, the team can now gather user feedback to identify improvement areas and further refine their concept. This iterative approach, made possible by the agility of no-code platforms, ensures the final product aligns with user needs. By leveraging the power of no-code platforms, citizen development, and Chat GPT, startups can significantly reduce product-to-market time. This competitive advantage allows businesses to stay ahead of rivals and quickly adapt to the ever-evolving market landscape.

5. Enhancing data analytics and visualization

Data analytics and visualization play a crucial role in modern businesses, allowing decision-makers to identify trends, monitor key performance indicators (KPIs), and make informed choices.
However, navigating complex datasets and deriving insights can be challenging, especially for those who lack a technical background. Combining no-code tools with Chat GPT offers a powerful solution to simplify data analysis and visualization processes while providing valuable insights.

No-Code Tools and Chat GPT for Data Analytics and Visualization

No-code tools allow users to create applications, dashboards, and data visualizations without coding expertise. These tools have democratized access to data analysis and visualization, enabling even non-technical stakeholders to derive insights from data. Popular no-code data analytics and visualization tools include Tableau, Microsoft Power BI, and Google Data Studio. Chat GPT is a large language model developed by OpenAI that can provide contextual explanations and predictions based on the provided data. By integrating Chat GPT into data analytics and visualization workflows, businesses can analyze complex datasets and receive human-like explanations of the results. This feature enables decision-makers to better understand the data and make better-informed choices.

Use Case: No-Code Dashboards and Chat GPT Integration

A company can leverage a no-code dashboard to display KPIs and other essential data while integrating Chat GPT for contextual explanations and predictions. This combination offers multiple benefits for decision-makers:

a). Simplified Data Analysis: No-code tools allow users to create interactive dashboards that enable them to visualize and explore data without the need for coding expertise. A user-friendly interface enables decision-makers to navigate the data and identify trends and patterns easily.

b). Contextual Explanations: Chat GPT can analyze the displayed data and generate human-like explanations, helping decision-makers understand the context behind the numbers. This feature ensures that even non-technical stakeholders can comprehend the significance of the data and make informed decisions.

c). AI-powered Predictions: Chat GPT can leverage historical data to predict future trends, enabling decision-makers to plan and adapt their strategies accordingly. By integrating these predictions into a no-code dashboard, users can visualize future scenarios and assess the potential impact on the business.

Conclusion

As no-code platforms, citizen development, and Chat GPT converge, the potential for innovation and digital transformation is vast. By empowering individuals to create solutions without coding expertise, these technologies democratize application development and break down barriers to entry. As businesses recognize the value of this convergence, productivity, creativity, and problem-solving across industries can surge.

The post The Convergence of Chat GPT, No-Code and Citizen Development appeared first on ReadWrite.

It is very hard to choose reliable exam questions and answers resources on the basis of review, reputation and validity, because people get ripped off by choosing the wrong service. Killexams makes sure to serve its clients well with respect to exam dumps updates and validity. Many clients who filed ripoff reports against other providers come to us for brain dumps and pass their exams enjoyably and easily. We never compromise on our review, reputation and quality, because killexams review, killexams reputation and killexams client confidence are important to all of us. We pay special attention to killexams.com review, killexams.com reputation, killexams.com ripoff report complaint, killexams.com trust, killexams.com validity, killexams.com report and killexams scam. If you see any bogus report posted by our competitors under a name such as killexams ripoff report complaint, killexams.com ripoff report, killexams.com scam, killexams.com complaint or something similar, keep in mind that there are always bad people damaging the reputation of good services for their own benefit. A large number of satisfied customers pass their exams using killexams.com brain dumps, killexams PDF questions, killexams practice questions and the killexams exam simulator. Visit our test questions, sample brain dumps and exam simulator, and you will definitely know that killexams.com is the best brain dumps site.

Which is the best dumps website?

You bet, Killexams is 100% legit and fully reliable. There are several features that make killexams.com real and legitimate. It provides up-to-date and 100% valid exam dumps containing real exam questions and answers. The price is low compared to many other services on the internet. The questions and answers are updated on a regular basis with the most recent brain dumps. Killexams account setup and product delivery are quite fast. File downloading is unlimited and extremely fast. Assistance is available via Livechat and Contact. These are the characteristics that make killexams.com a solid website that offers exam dumps with real exam questions.

Is killexams.com test material dependable?

There are several Questions and Answers providers in the market claiming that they provide Actual Exam Questions, Braindumps, Practice Tests, Study Guides, cheat sheets and materials under many other names, but most of them are resellers that do not update their contents frequently. Killexams.com is the best website of 2023 that understands the issue candidates face when they spend their time studying obsolete contents taken from free PDF download sites or reseller sites. That is why killexams.com updates its Exam Questions and Answers with the same frequency as they are updated in the real test. Exam dumps provided by killexams.com are reliable, up-to-date and validated by certified professionals. They maintain a Question Bank of valid questions that is kept up-to-date by checking for updates on a daily basis.

If you want to pass your exam fast while improving your knowledge of the latest course contents and topics of the new syllabus, we recommend downloading PDF Exam Questions from killexams.com and getting ready for the actual exam. When you feel that you should register for the Premium Version, just visit killexams.com and register, and you will receive your username and password by email within 5 to 10 minutes. All future updates and changes in Questions and Answers will be provided in your Download Account. You can download Premium Exam Dumps files as many times as you want. There is no limit.

Killexams.com provides VCE Practice Test Software so you can practice for your exam by taking the test frequently. It asks the real exam questions and marks your progress. You can take the test as many times as you want. There is no limit. It will make your test prep very fast and effective. When you start getting 100% marks on the complete pool of questions, you will be ready to take the actual test. Go register for the test at a test center and enjoy your success.
